When SERP Keywords and LLM Terms Overlap (and When They Don’t)

Topic: SEO

Published:

Written by: Bernard Huang

For more than two decades, search visibility followed a relatively stable contract. You identified keywords, created relevant content, earned rankings, and captured traffic. Even as algorithms evolved, the underlying mechanics of discovery remained consistent. If you ranked, you were visible.

That contract no longer holds.

The shift from retrieval-based search to generative systems has introduced a new layer of complexity. Content can now shape the answers users receive without ever earning a click. Visibility, once tightly coupled to rankings and traffic, is becoming increasingly decoupled from both.

This has raised a common and reasonable question among SEO teams: If I’m optimizing for SERP keywords, am I also optimizing for large language models?

The answer is: sometimes. And increasingly, not always.

What We Mean by “SERP Keywords” and “LLM Terms”

To understand where optimization still works (and where it breaks), we need to distinguish between two related but fundamentally different types of language.

SERP Terms: The Language of Rankings

SERP terms are the familiar inputs of traditional SEO. They are the keywords and phrases users type into search engines and the terms content teams optimize against to earn rankings.

These terms are:

Query-driven

Measured through rankings, search volume, and competition

Optimized at the page level

Evaluated primarily through relevance and authority signals

For years, mastering this language was sufficient. If you could rank for the right terms, you could reliably generate traffic.

LLM Terms: The Language of Understanding

LLM terms operate at a different layer.

They are not limited to explicit queries or exact phrasing. Instead, they consist of concepts, entities, relationships, and contextual signals that language models extract when forming answers.

These terms are:

Concept-driven rather than query-driven

Often implicit rather than explicit

Evaluated across multiple sources and contexts

Interpreted at the topic or brand level, not page-by-page

LLMs are not asking which page best matches a keyword. They are asking which sources help them construct the most complete and confident answer.

Why High Overlap Still Matters

Despite these differences, there is still meaningful overlap between SERP terms and LLM terms. And that overlap remains important.

Where SERP and LLM Language Naturally Align

High overlap tends to occur in areas where:

Topics are well-defined and stable

Terminology is consistent across the web

User intent is primarily informational

Expert consensus exists

In these cases, content that ranks well often supplies the same foundational language that models rely on to generate answers.

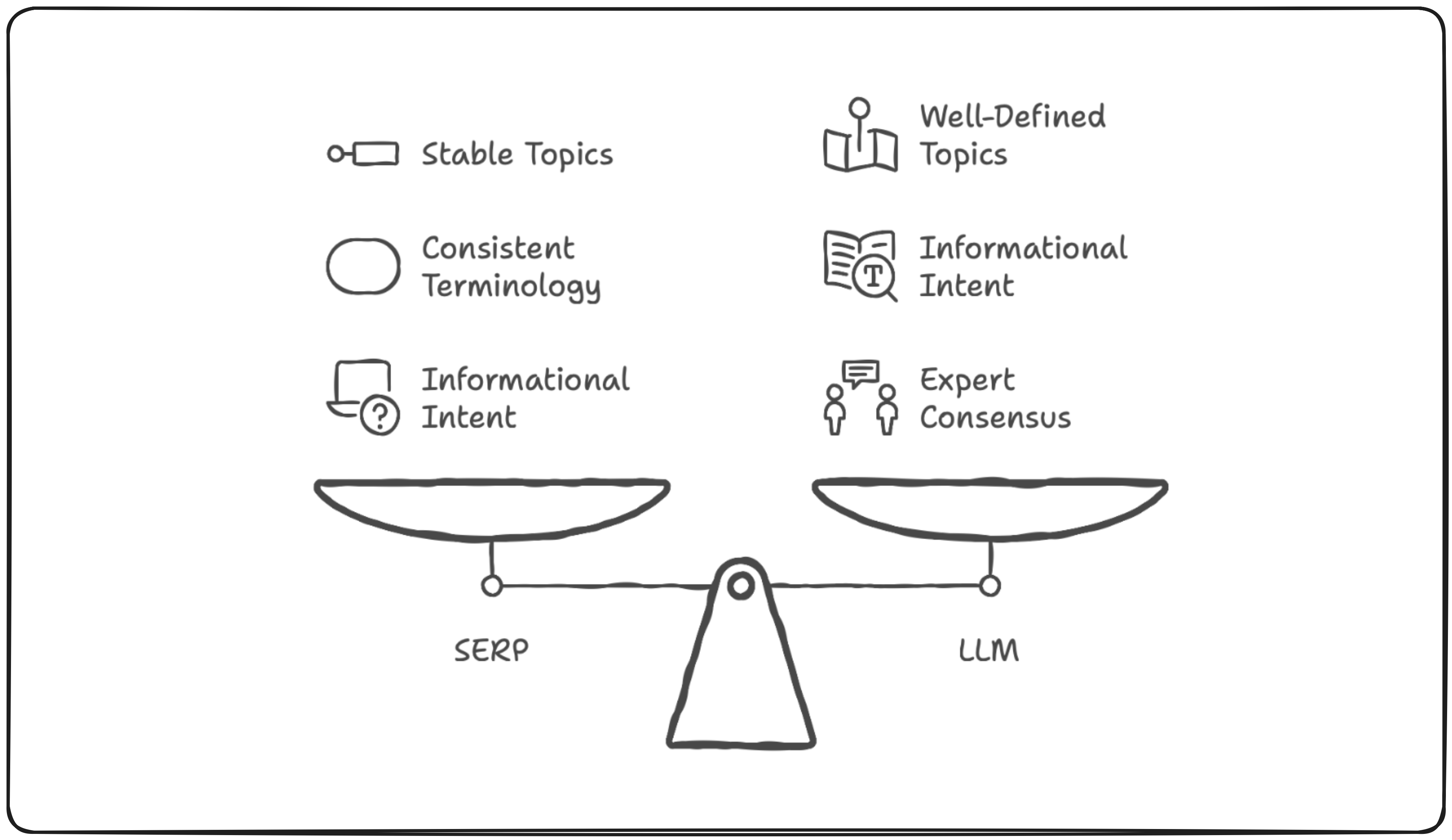
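One rough way to gauge how much two vocabularies overlap is to treat SERP keywords and LLM terms as two sets of normalized phrases and compare them with Jaccard similarity (shared terms divided by all distinct terms). This is a minimal sketch under that assumption, not a standard metric for AI visibility. The function names and example term lists are hypothetical, and how the LLM-side terms are collected (for instance, from concepts that recur in sampled AI answers) is left open.

```python
from collections.abc import Iterable
from dataclasses import dataclass


@dataclass
class OverlapReport:
    shared: set[str]      # terms present in both vocabularies
    serp_only: set[str]   # keyword targets with no counterpart in the LLM terms
    llm_only: set[str]    # concepts answers use that the keyword list misses
    jaccard: float        # |shared| / |union|, from 0.0 to 1.0


def normalize(terms: Iterable[str]) -> set[str]:
    """Lowercase, collapse internal whitespace, and drop empty entries."""
    return {" ".join(t.lower().split()) for t in terms if t.strip()}


def compare_terms(serp_terms: Iterable[str], llm_terms: Iterable[str]) -> OverlapReport:
    """Compare two term lists as sets (hypothetical helper, not a standard metric)."""
    serp = normalize(serp_terms)
    llm = normalize(llm_terms)
    union = serp | llm
    shared = serp & llm
    # Guard against division by zero when both lists are empty.
    jaccard = len(shared) / len(union) if union else 0.0
    return OverlapReport(shared, serp - llm, llm - serp, jaccard)


if __name__ == "__main__":
    report = compare_terms(
        ["Keyword research", "search volume", "backlinks"],
        ["keyword  research", "backlinks", "topical authority", "entity coverage"],
    )
    print(report.jaccard)            # 0.4
    print(sorted(report.llm_only))   # ['entity coverage', 'topical authority']
```

In this toy example, `llm_only` holds the concepts an answer relies on that the keyword list never captured, while a high `jaccard` value corresponds to the well-defined, consensus topics where the two vocabularies align.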

Why Ranking Content Still Feeds AI Systems

LLMs are trained on large portions of the public web. High-ranking content frequently appears in that training data, and its prominence can act as a signal of relevance and credibility.

As a result, traditional SEO work often creates baseline eligibility for AI visibility. Ranking still matters, but now it functions more as an entry point than a guarantee.

Where the Overlap Breaks Down

The limitations of keyword-first optimization become more visible as queries grow more complex.

Long Long Tail and Implied-Intent Queries

Many modern queries are not explicitly phrased. Users ask incomplete questions, stack constraints, or expect systems to infer intent.

LLMs excel in these situations because they reason across concepts rather than matching strings. Content optimized narrowly around specific keywords often fails to surface because it does not address the underlying intent holistically.

Fragmented Content and Narrow Optimization

Pages designed primarily to rank often explain just enough to satisfy an algorithm, not enough to satisfy a model.

When content:

Covers a topic in isolation

Avoids adjacent questions

Lacks connective context

It may rank, but it rarely becomes a trusted source for synthesis.

Brand Inconsistency Across Topics

Language models build internal representations of entities. When a brand appears inconsistently across related topics, or surfaces only in narrow contexts, it becomes difficult for models to associate that brand with authority.

Strong pages cannot compensate for weak or missing coverage elsewhere.

Why High Keyword Coverage Is Not the Same as High AI Visibility

This distinction is subtle but critical. Traditional SEO treats visibility as a retrieval problem: if a page matches a query and earns enough authority, it ranks. Language models operate differently. They are not retrieving pages to present options; they are assembling answers. In that process, the unit of value shifts from the page to the concept. A piece of content can rank well because it satisfies a narrow query and still fail to meaningfully contribute to a model’s understanding of the topic.

What surfaces in AI-generated answers is not just what matches keywords, but what explains ideas clearly, consistently, and with minimal uncertainty. Optimization, as a result, is no longer only about being found—it is about being understood.

Ranking Is a Retrieval Signal, Not an Understanding Signal

Search engines retrieve. Language models interpret. Ranking answers the question: Which page best matches this query? But LLMs answer a different one: Which sources help me explain this clearly and confidently?

In other words, the best-ranking page is not always the best explanatory source.

LLMs Optimize for Confidence, Not Clicks

Generative systems are designed to reduce uncertainty. They favor sources that:

Explain concepts consistently

Reinforce information across contexts

Align with broader topic understanding

Visibility emerges from coherence and repetition, not from isolated wins.

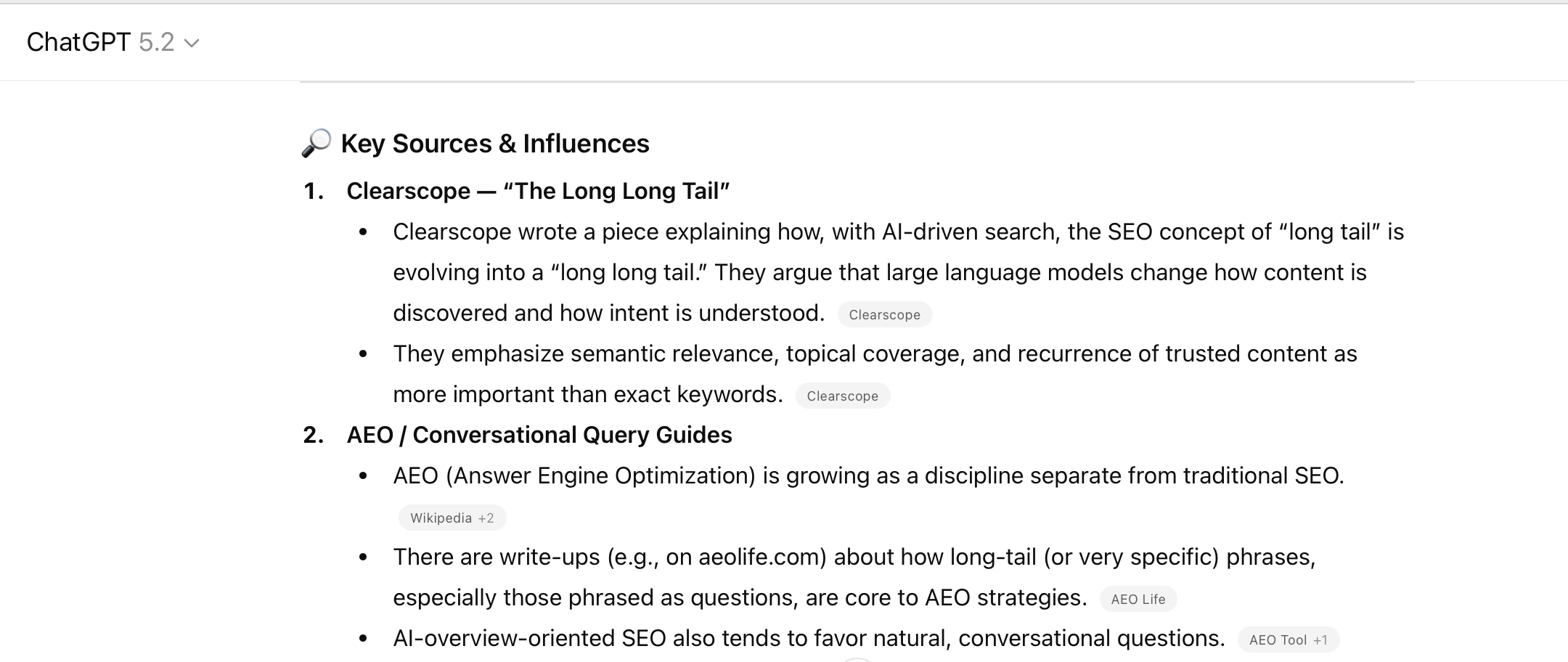

A Practical Signal of How This Works in the Real World

We’ve seen this dynamic play out in our own writing. After publishing a thought leadership piece that clearly defined what we call the “long long tail”—a way to describe how generative systems expand the surface area of discovery beyond what traditional keyword strategies can capture—the concept began appearing organically in responses from AI tools to SEO-related questions.

There was no page optimized to rank for the term. No campaign designed to promote it. What changed was not keyword coverage, but conceptual clarity. By naming a pattern and explaining it consistently, the idea became easier for models to recognize, recall, and reuse.

That outcome is not unique to us. It is a signal of how language models internalize expertise. Concepts that are well-defined, repeatedly reinforced, and situated within a broader topic framework are more likely to surface than those that exist only as isolated pages or tactical keywords.

How LLMs Decide Which Brands to Reference

Understanding this shift requires thinking beyond individual URLs. Language models do not evaluate content in isolation; they build representations of topics by observing how ideas, terms, and entities appear across many contexts. Visibility, in this environment, emerges from consistent participation in a topic, not from the performance of any single page.

Entity Recognition and Topic Ownership

Models look for patterns:

What topics a brand consistently appears alongside

Whether its expertise is clearly defined

How often it contributes foundational knowledge

Authority is inferred through presence, not proclamation.

Semantic Completeness Over Exact Match

Exact keywords matter less than conceptual coverage. When generating answers, models find it easier to trust brands that address:

Core concepts

Supporting ideas

Edge cases and implications

What This Means for Modern SEO Teams

The implications are not about abandoning SEO, but about expanding its scope.

SERP Optimization Is the Floor, Not the Ceiling

Ranking remains necessary. It is no longer sufficient.

Traffic volatility is not a temporary disruption. It reflects a structural shift in how discovery works.

Optimization Must Move Beyond Pages

Teams need to think in terms of:

Topics instead of URLs

Consistency instead of one-off wins

Visibility instead of clicks alone

The goal is no longer just to rank, but to be understood.

The New Question to Ask

The most important shift is not tactical. It is conceptual. For years, optimization success was defined by whether a page ranked for a target query. That question still matters, but it no longer captures how visibility actually works. The more useful question now is not “Do we rank for this?” but “Would a language model trust us to explain this?”

That question changes how content is planned, evaluated, and measured. It reframes optimization around authority, coherence, and completeness—qualities that matter whether discovery happens through links or answers.

The future of visibility belongs to brands that recognize this distinction early and build accordingly.
